Congressional Elections, Logistic Regression, and Feature Selection

In the spirit of our recent election season (hah), I’ve decided to do some exploration in using boosted trees to identify features that have the most predictive power. Using those selected features and some propreitary intuition, I’ll then use a logistic model to predict whether a congressional district will be democrat or republican.

For comparison’s sake, I’ll begin by specifying a logistic model with features that I pick without any empirical evidence to back up my decision.

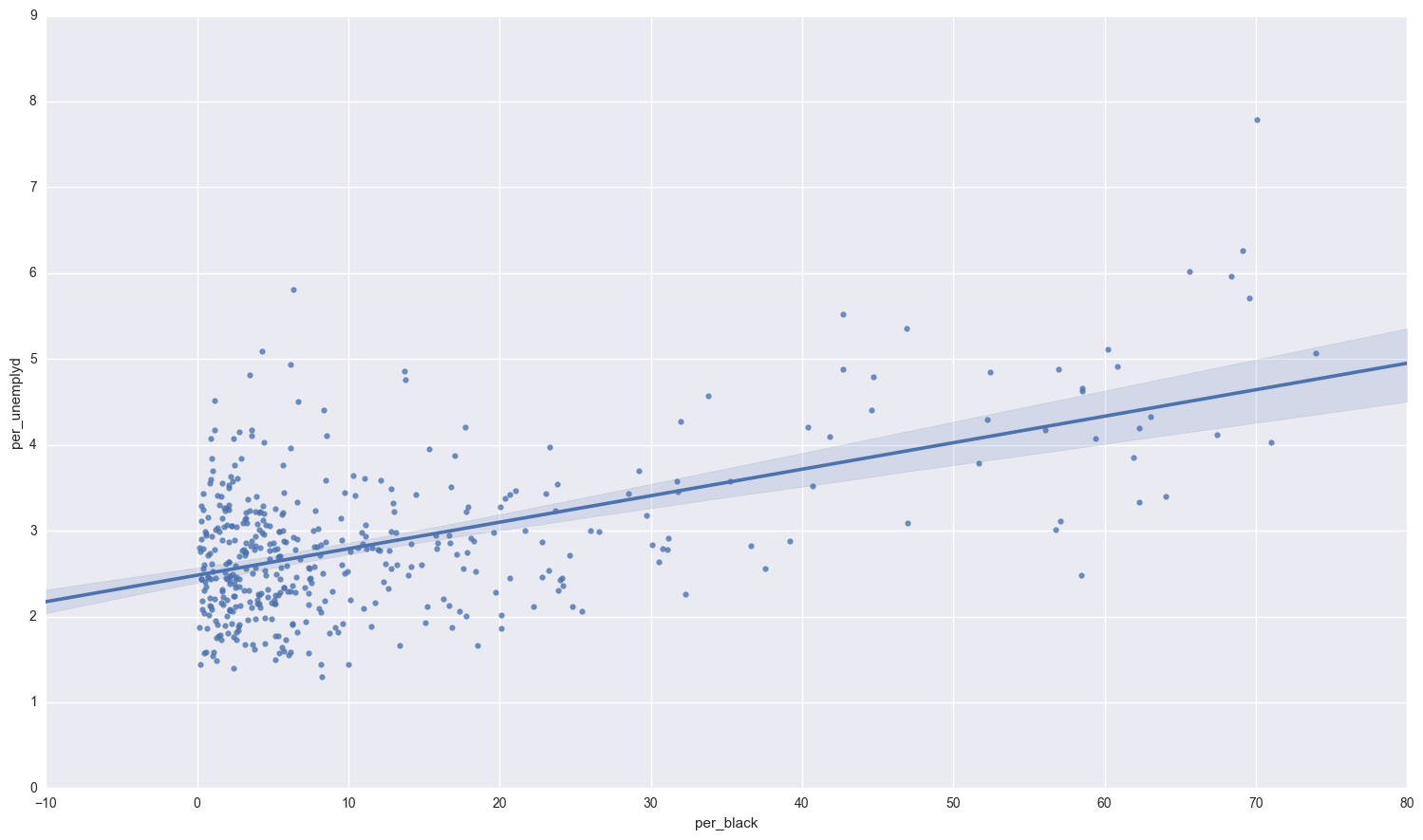

The data describe all 435 districts in the 105th congress from 1997-1998. It contains demographic and employment data for each district as well as a variable indicating whether the district’srepresentative in the House was a Republican or not. Republican districts were coded as 1 while Democratic/independent districts were coded as 0. There are a total of 31 variables, as described below:

import pandas as pd

import numpy as np

import statsmodels.api as sm

from statsmodels.discrete.discrete_model import Logit

import xgboost as xgb

from xgboost.sklearn import XGBClassifier

from __future__ import division

import seaborn as sns

from sklearn.metrics import confusion_matrix, classification_report

%matplotlib inline

import matplotlib.pylab as plt

from matplotlib.pylab import rcParams

rcParams['figure.figsize'] = 18.5, 10.5

congress = pd.read_stata("http://rlhick.people.wm.edu/econ407/data/congressional_105.dta" )

For the naive logit model, I will estimate

where per_ indicates that variable is a percentage of the district. I use percentages so that (presumably) the results are agnostic of the size of a district and can be generalized to any region in the US.

#making percentages

variables = ['unemplyd', 'age65', 'black', 'blucllr']

for v in variables:

congress['per_' + v] = congress[[v, 'populatn']].apply(lambda row: (row[0] / row[1])*100, axis = 1)

Before I do anything, I check for null values and get a sense of the data

congress.isnull().sum()

state 0

fipstate 0

sc 0

cd 0

repub 1

age65 0

black 0

blucllr 0

city 0

coast 0

construc 0

cvllbrfr 0

enroll 0

farmer 0

finance 0

forborn 0

gvtwrkr 0

intrland 0

landsqmi 0

mdnincm 0

miltinst 0

miltmajr 0

miltpop 0

nucplant 0

popsqmi 0

populatn 0

rurlfarm 0

transprt 0

unemplyd 0

union 0

urban 0

whlretl 0

dtype: int64

After doing some research on Oklahoma’s first congressional district, they had republican congressman Steve Largent from 1994 to 2002. I will code that row as republican.

congress.repub.fillna(value = 1, inplace = True)

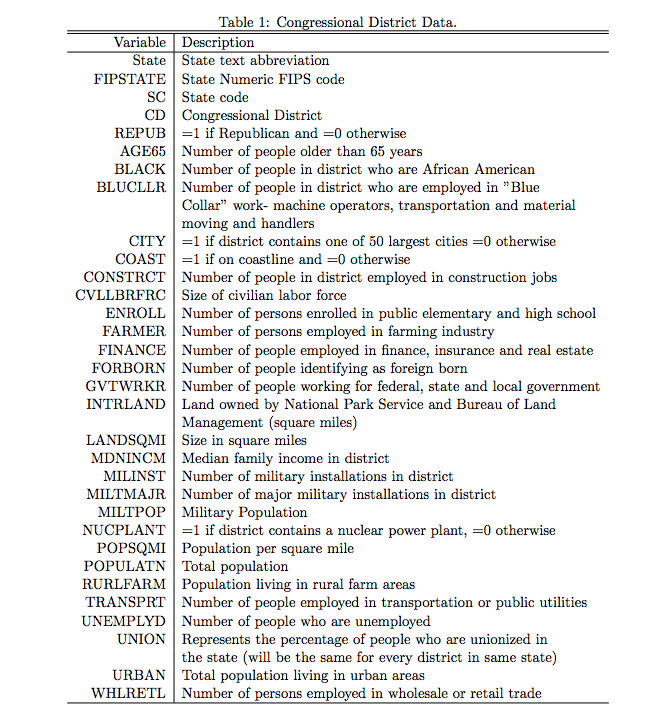

Lets see how balanced my data set is

sns.countplot(x = 'repub', data = congress, palette = 'muted');

print congress.repub.value_counts()

1.0 228

0.0 207

Name: repub, dtype: int64

So our data is fairly balanced, although republicans have the slight majority. Lets check out the relationship between the blue collar workers and black population:

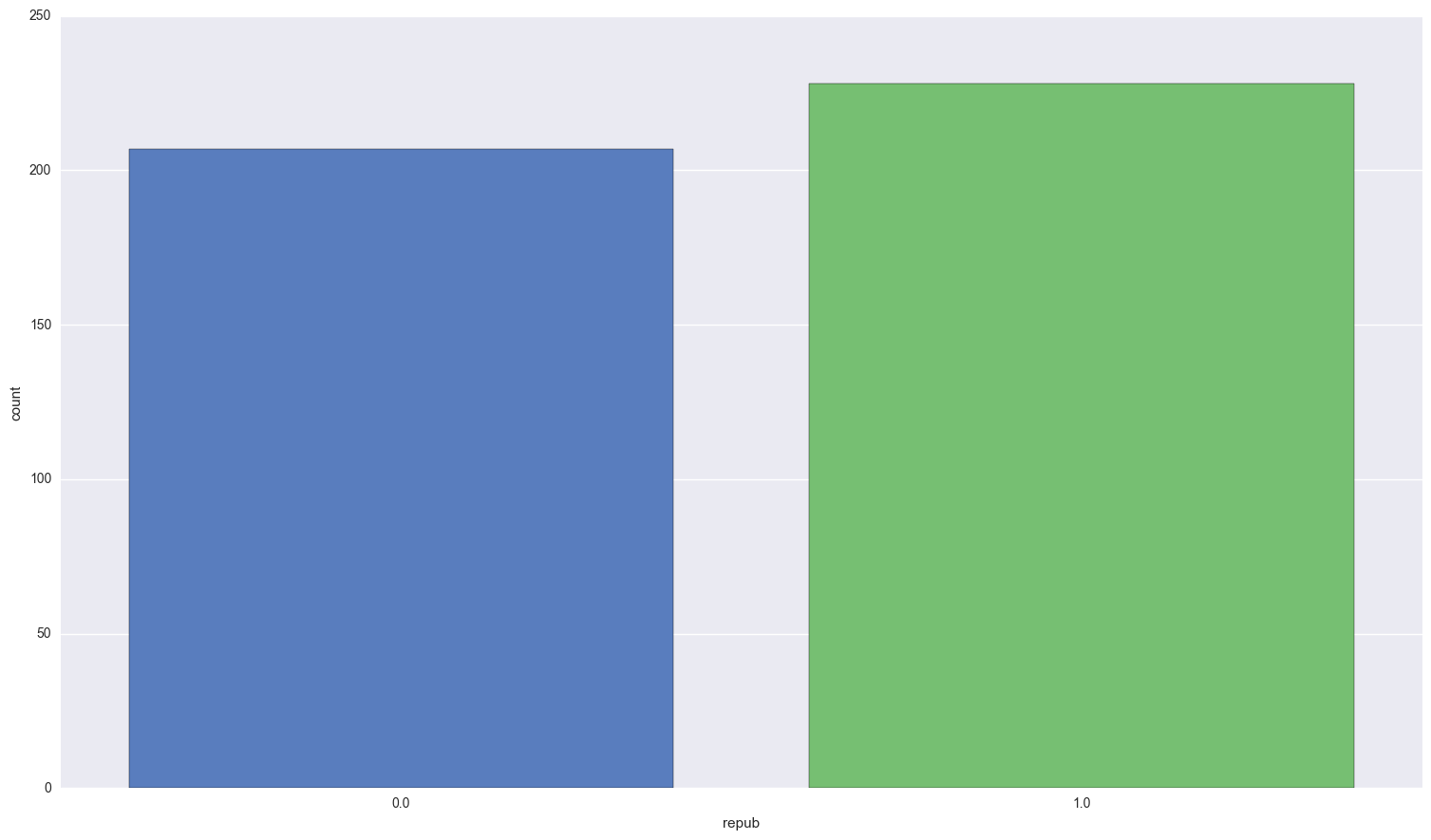

sns.regplot(congress.per_black, congress.per_blucllr);

There doesn’t seem to be a definite relationship between the percentage of blue collar workers and percentage of black population. What about unemployment and the black population?

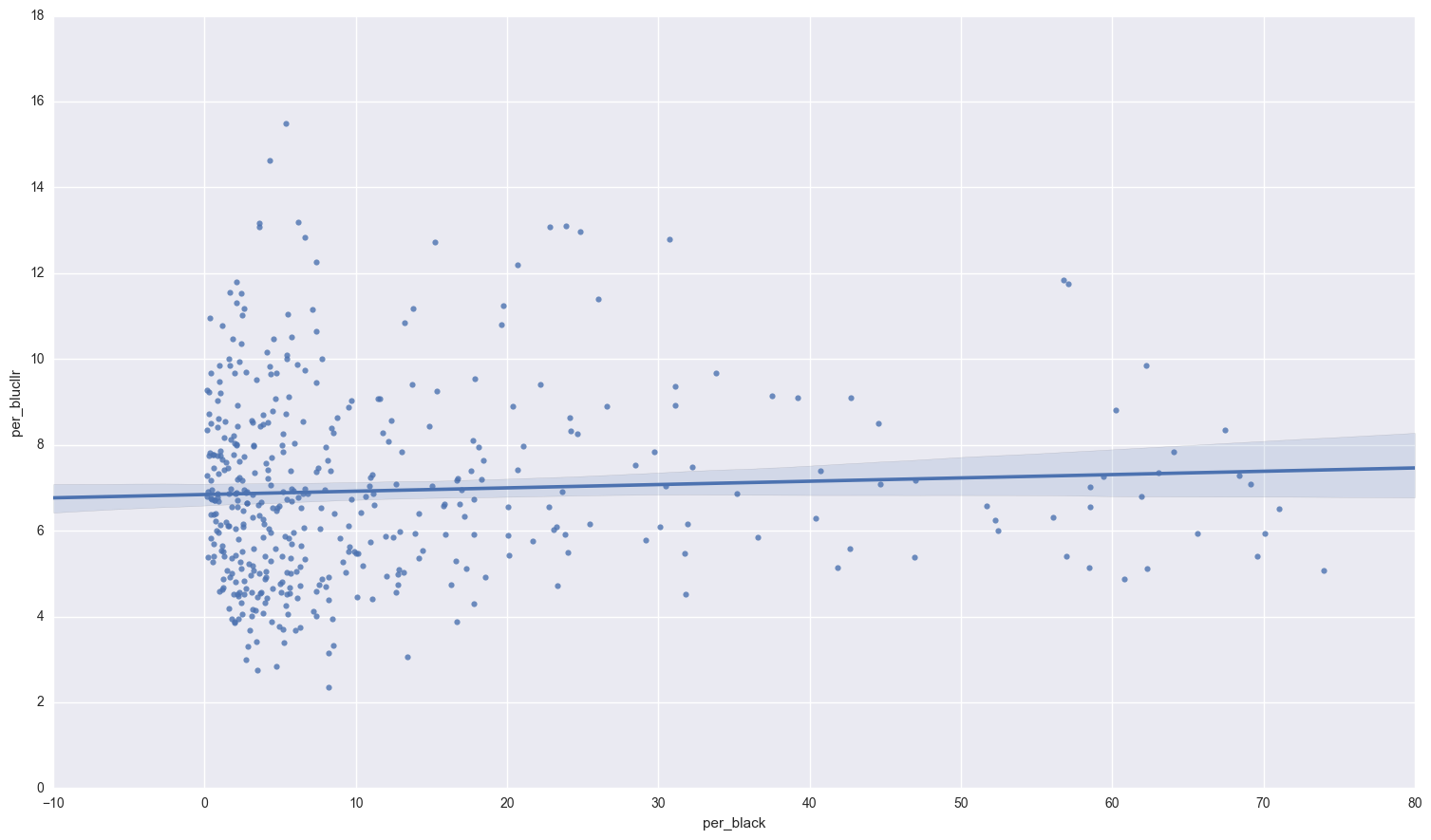

sns.regplot(congress.per_black, congress.per_unemplyd);

Those two variables are positively correlated.

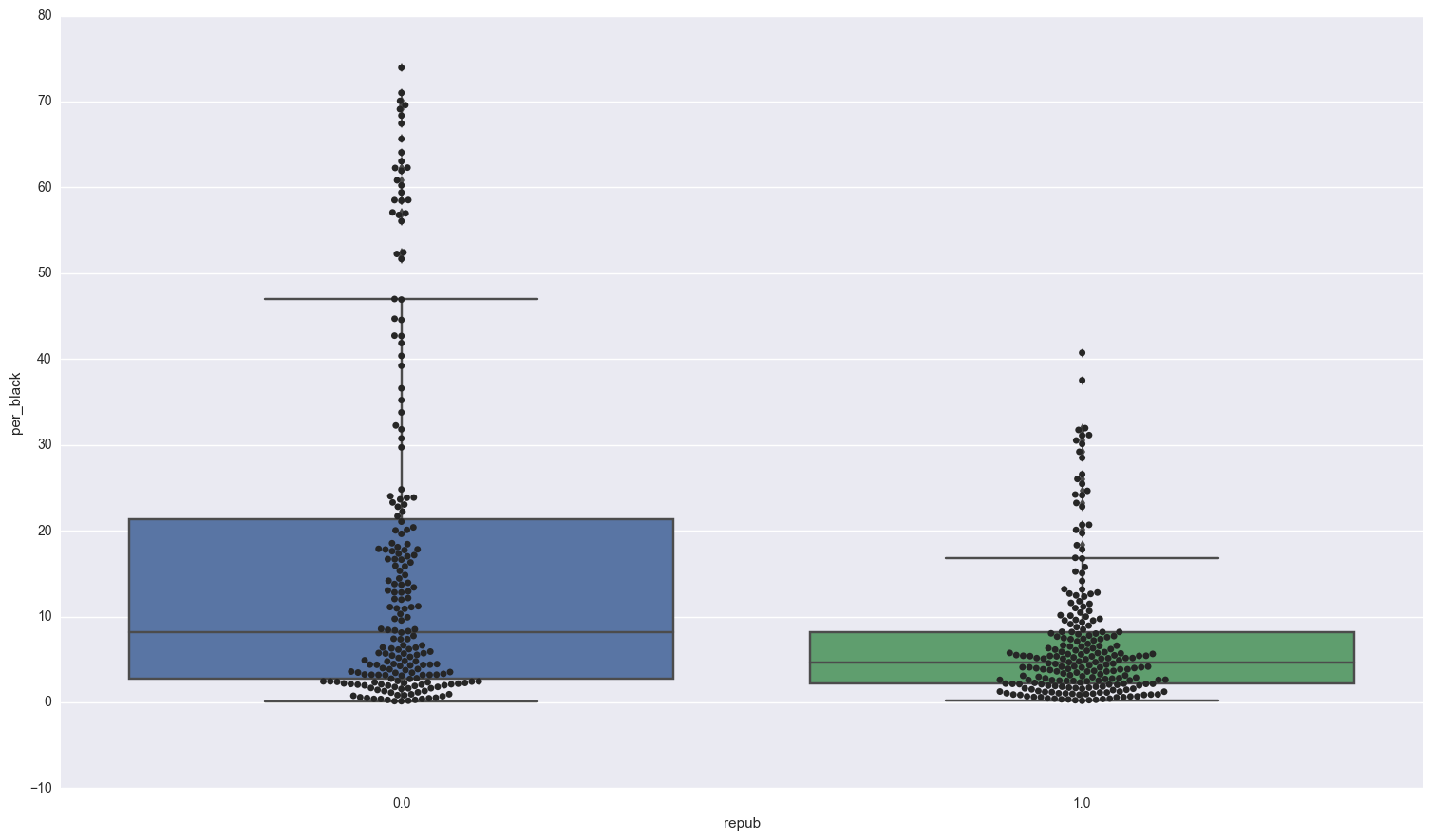

Lets take a look at differences in race that elected a republican congressman:

sns.boxplot(x = 'repub', y = 'per_black', data = congress );

sns.swarmplot(x = 'repub', y = 'per_black', data = congress, color = ".15" )

<matplotlib.axes._subplots.AxesSubplot at 0x119de4a10>

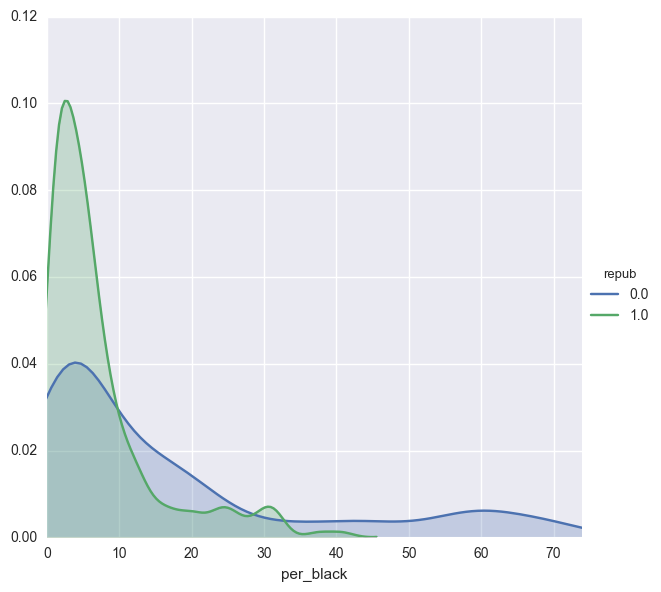

facetgrid = sns.FacetGrid(congress,hue='repub',size=6)

facetgrid.map(sns.kdeplot,'per_black',shade=True)

facetgrid.set(xlim=(0, congress.per_black.max()))

facetgrid.add_legend()

<seaborn.axisgrid.FacetGrid at 0x119e327d0>

print "Districts that went Republican: \n",congress[congress.repub == 1].per_black.describe()

print "\nDistricts that went Democrat: \n",congress[congress.repub == 0].per_black.describe()

Districts that went Republican:

count 228.000000

mean 7.139820

std 7.773183

min 0.178235

25% 2.148288

50% 4.678620

75% 8.204570

max 40.724916

Name: per_black, dtype: float64

Districts that went Democrat:

count 207.000000

mean 17.020763

std 20.083805

min 0.131355

25% 2.776919

50% 8.122120

75% 21.369574

max 73.948710

Name: per_black, dtype: float64

The median value for districts that went Democrat is 4 % higher than those that went republican. In addition to the districts that went Republican are more closely centered around the mean and median, where as in the districts that went Democrat, there is a significant discrepancy between the mean and median. In fact, 25 % of the data falls between 8.12 % and 21.36 % for Democratic districts, but in Republican districts that number is 4.6 % and 8.2 %.

I expect that the percentage of the population that is black will have significant predicitive power in our model.

Examine the correlation between our variables of interest:

ind_vars = [ 'per_age65', 'per_black', 'per_blucllr', 'city','mdnincm', 'per_unemplyd', 'union']

congress[ind_vars].corr()

| per_age65 | per_black | per_blucllr | city | mdnincm | per_unemplyd | union | |

|---|---|---|---|---|---|---|---|

| per_age65 | 1.000000 | -0.135644 | -0.003851 | -0.151725 | -0.176406 | -0.154773 | -0.016208 |

| per_black | -0.135644 | 1.000000 | 0.055332 | 0.232024 | -0.312837 | 0.549604 | -0.135479 |

| per_blucllr | -0.003851 | 0.055332 | 1.000000 | -0.198259 | -0.470878 | 0.110561 | -0.067490 |

| city | -0.151725 | 0.232024 | -0.198259 | 1.000000 | -0.002129 | 0.299911 | 0.089047 |

| mdnincm | -0.176406 | -0.312837 | -0.470878 | -0.002129 | 1.000000 | -0.505595 | 0.251465 |

| per_unemplyd | -0.154773 | 0.549604 | 0.110561 | 0.299911 | -0.505595 | 1.000000 | 0.167665 |

| union | -0.016208 | -0.135479 | -0.067490 | 0.089047 | 0.251465 | 0.167665 | 1.000000 |

Nothing seriously outrageous to see here. Percent black and unemployment have the highest correlation at 54.9%, followed by city and unemployment at 29.9%. Something to keep in mind when we are doing feature selections.

quick aside about MLE and Logit

I’m not going to go into a ton detail about logistic regression, because it has been done ad nauseam. If you would like more info about it, the wiki is a great place to start.

I’d like to detail a bit about MLE or maximum likelihood estimation in the context of the logit model. Stats models uses maximum likelihood to estimate the coefficients. In order to use MLE, we assume that the errors in our model are independent and identically Logit distributed. The cumulative logit function is given by:

The log-likelihood is is then:

When I run a logitstic model in statsmodels, it estimates parameters such that the above sum is maximized.

So now that we know what’s going on behind the scenes, lets heckin do it :)

dep_var = 'repub'

x_const = sm.add_constant(congress[ind_vars])

y = congress[dep_var]

#Logit and probit models

logit_results = Logit(y, x_const).fit()

logit_results.summary()

Optimization terminated successfully.

Current function value: 0.542084

Iterations 7

| Dep. Variable: | repub | No. Observations: | 435 |

|---|---|---|---|

| Model: | Logit | Df Residuals: | 427 |

| Method: | MLE | Df Model: | 7 |

| Date: | Sun, 15 Jan 2017 | Pseudo R-squ.: | 0.2166 |

| Time: | 12:59:27 | Log-Likelihood: | -235.81 |

| converged: | True | LL-Null: | -301.01 |

| LLR p-value: | 5.158e-25 |

| coef | std err | z | P>|z| | [95.0% Conf. Int.] | |

|---|---|---|---|---|---|

| const | 9.5500 | 1.618 | 5.902 | 0.000 | 6.378 12.722 |

| per_age65 | -0.1199 | 0.037 | -3.217 | 0.001 | -0.193 -0.047 |

| per_black | -0.0504 | 0.013 | -3.956 | 0.000 | -0.075 -0.025 |

| per_blucllr | -0.0712 | 0.063 | -1.135 | 0.256 | -0.194 0.052 |

| city | -0.6513 | 0.259 | -2.519 | 0.012 | -1.158 -0.145 |

| mdnincm | -5.843e-05 | 1.92e-05 | -3.037 | 0.002 | -9.61e-05 -2.07e-05 |

| per_unemplyd | -1.4488 | 0.233 | -6.210 | 0.000 | -1.906 -0.992 |

| union | -0.0280 | 0.016 | -1.705 | 0.088 | -0.060 0.004 |

logit_results.get_margeff(dummy = True).summary()

| Dep. Variable: | repub |

|---|---|

| Method: | dydx |

| At: | overall |

| dy/dx | std err | z | P>|z| | [95.0% Conf. Int.] | |

|---|---|---|---|---|---|

| per_age65 | -0.0221 | 0.007 | -3.363 | 0.001 | -0.035 -0.009 |

| per_black | -0.0093 | 0.002 | -4.215 | 0.000 | -0.014 -0.005 |

| per_blucllr | -0.0122 | 0.010 | -1.269 | 0.205 | -0.031 0.007 |

| city | -0.1201 | 0.046 | -2.586 | 0.010 | -0.211 -0.029 |

| mdnincm | -1.077e-05 | 3.4e-06 | -3.163 | 0.002 | -1.74e-05 -4.1e-06 |

| per_unemplyd | -0.2670 | 0.035 | -7.538 | 0.000 | -0.336 -0.198 |

| union | -0.0052 | 0.003 | -1.725 | 0.085 | -0.011 0.001 |

Before I look at how well the model is classifying, I’ll interpret the marginal effects:

For each variable that is a percentage, the marginal effect is the amount that the probability drops for each percent increase in that variable. Those include per_age65, per_black, per_bluecllr, per_unemplyd, and union. The most notable of these is that for every percentage increase in unemployment, the probability that the district elects a republican congressman or woman decreases by almost 27%!! Oh how the times have changed.

Another interesting result is that for every 10,000 $ increase in median income, the probability of a republican goes down by about 10.7 %. This goes against conventional wisdom that says that richer folks prefer republican canidates. Again, I think that if region was controlled for, the effect may be different.

Something that I think would be interesting to explore is how religious preferences within each district affect republican chances. Anyway, on to the classification reports

predictions = np.array([1 if x >= 0.5 else 0 for x in logit_results.predict()])

print classification_report(congress.repub, predictions)

print "Confusion matrix:\n"

print confusion_matrix(congress.repub, predictions)

precision recall f1-score support

0.0 0.74 0.67 0.70 207

1.0 0.72 0.78 0.75 228

avg / total 0.73 0.73 0.73 435

Confusion matrix:

[[139 68]

[ 50 178]]

Okay, so the model isn’t awful, although it could use some serious improvement. Democratic districts are only classified correctly (139 / (139 + 68)) = 67% of the time. Okay so that’s not very good at all. The pseudo R2 is 0.2166, which is okay. A note about that:

McFadden’s (pseudo) R2 = (1 - (log likelihood full model / log likelihood only intercept)). The log likelihood of the intercept model is treated as a total sum of squares, and the log likelihood of the full model is treated as the sum of squared errors (like in approach 1). The ratio of the likelihoods suggests the level of improvement over the intercept model offered by the full model. A higher R2 suggests a better model that explains more of the variance.

We proceed boldy forward by diving in to Feature Selection

Using a Boosted classifier to do Feature Selection

A cool feature of the xgboost library is that after it estimates all the trees, it calculates for each tree the amount that each attribute split point improves the performance measure, weighted by the number of observations the node is responsible for. The performance measure may be the purity (Gini index) used to select the split points or another more specific error function. For more information, check out this stack overflow question.

I start with using all variables except those that are identifying information like state, FIPS code etc. I also make the rest of the population count variables in to percentages so that they are comparable to other districts.

needs_convert = ['construc', 'cvllbrfr', 'enroll', 'farmer', 'finance', 'forborn', 'gvtwrkr', 'miltpop',

'rurlfarm', 'transprt', 'urban', 'whlretl']

for v in needs_convert:

congress['per_' + v] = congress[[v, 'populatn']].apply(lambda row: (row[0] / row[1])*100, axis = 1)

all_ind_vars = ['per_age65', 'per_black', 'per_blucllr', 'city','coast', 'per_construc', 'per_cvllbrfr',

'per_enroll','per_farmer', 'per_finance', 'per_forborn', 'per_gvtwrkr', 'intrland', 'landsqmi',

'mdnincm', 'miltinst', 'miltmajr', 'per_miltpop', 'nucplant', 'popsqmi','populatn', 'per_rurlfarm',

'per_transprt', 'per_unemplyd', 'union', 'per_urban','per_whlretl']

boosted = XGBClassifier(max_depth= 4, objective= 'binary:logistic')

boosted.fit(congress[all_ind_vars], congress.repub)

XGBClassifier(base_score=0.5, colsample_bylevel=1, colsample_bytree=1,

gamma=0, learning_rate=0.1, max_delta_step=0, max_depth=4,

min_child_weight=1, missing=None, n_estimators=100, nthread=-1,

objective='binary:logistic', reg_alpha=0, reg_lambda=1,

scale_pos_weight=1, seed=0, silent=True, subsample=1)

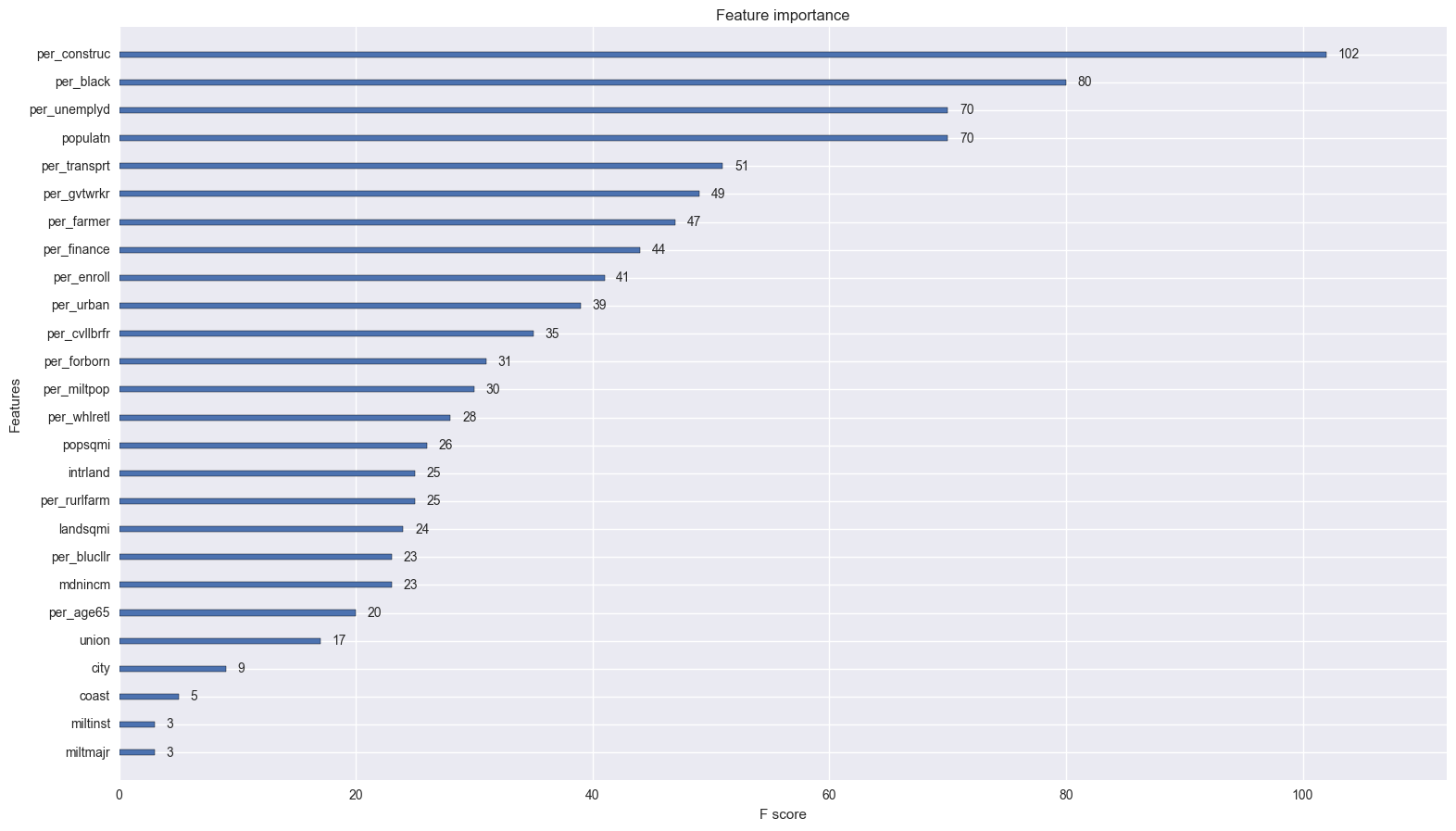

xgb.plot_importance(boosted.booster());

Some surprising results, some expected results.

Unexpected:

The percentage of the population that works construction is the most important feature… interesting. Personally, I would consider construction work to be blue collar work, however, they weren’t counted as part of the population that constituted blue collar workers. Maybe instead of campaigning with the steel workers, congressmen should pander to construction unions (do those even exist?). The size of the district also plays a significant role. It will be interesting to see whether size contributes positively or negatively to the probability of a republican.

Government, transport and utility workers also contribute significantly. A common theme here is features that are most important are either directly related to government work or somehow often implicated with government. Indeed, whether a person is employed or not seriously colors their view of how the government should be run.

Expected

I was right about unemployment and the black population playing a large role in elections! Sweet validation.

New Model

The new model I will estimate is:

I include all the top four variables that were selected for by the boosted trees. All of those features, with the exception of black, are indicators of employment. To control for age, race, and economic status of the district I include percentage black, over age 65, and median income. I include the amount of National Park land because that land is owned by the government, and provides employment and tourist attractions for citizens. City and coast are to control for urban populations and location respectively. Coast especially should account for the democratic “coastal elite”. Enroll is to control for those voters whose children are enrolled in public schools, also a factor that would color their view of the government.

Lets let er rip

new_vars = ['per_construc', 'per_black', 'per_unemplyd', 'per_transprt', 'per_gvtwrkr', 'per_enroll', 'per_age65',

'mdnincm','populatn', 'intrland', 'city', 'coast' ]

new_x_const = sm.add_constant(congress[new_vars])

new_model = Logit(congress.repub, new_x_const).fit()

new_model.summary()

Optimization terminated successfully.

Current function value: 0.504768

Iterations 7

| Dep. Variable: | repub | No. Observations: | 435 |

|---|---|---|---|

| Model: | Logit | Df Residuals: | 422 |

| Method: | MLE | Df Model: | 12 |

| Date: | Sun, 15 Jan 2017 | Pseudo R-squ.: | 0.2705 |

| Time: | 12:59:28 | Log-Likelihood: | -219.57 |

| converged: | True | LL-Null: | -301.01 |

| LLR p-value: | 1.362e-28 |

| coef | std err | z | P>|z| | [95.0% Conf. Int.] | |

|---|---|---|---|---|---|

| const | -2.1656 | 3.265 | -0.663 | 0.507 | -8.566 4.235 |

| per_construc | 0.3077 | 0.210 | 1.466 | 0.143 | -0.104 0.719 |

| per_black | -0.0291 | 0.013 | -2.304 | 0.021 | -0.054 -0.004 |

| per_unemplyd | -1.7545 | 0.268 | -6.558 | 0.000 | -2.279 -1.230 |

| per_transprt | -0.1287 | 0.220 | -0.585 | 0.559 | -0.560 0.303 |

| per_gvtwrkr | -0.1081 | 0.064 | -1.680 | 0.093 | -0.234 0.018 |

| per_enroll | 0.2008 | 0.080 | 2.524 | 0.012 | 0.045 0.357 |

| per_age65 | -0.0035 | 0.055 | -0.063 | 0.949 | -0.111 0.104 |

| mdnincm | -1.363e-05 | 2.27e-05 | -0.602 | 0.547 | -5.8e-05 3.08e-05 |

| populatn | 9.015e-06 | 3.18e-06 | 2.837 | 0.005 | 2.79e-06 1.52e-05 |

| intrland | 2.114e-08 | 1.15e-08 | 1.846 | 0.065 | -1.31e-09 4.36e-08 |

| city | -0.4814 | 0.286 | -1.685 | 0.092 | -1.041 0.079 |

| coast | -0.6496 | 0.267 | -2.432 | 0.015 | -1.173 -0.126 |

new_predictions = np.array([1 if x >= 0.5 else 0 for x in new_model.predict()])

print classification_report(congress.repub, new_predictions)

print "Confusion matrix:\n"

print confusion_matrix(congress.repub, new_predictions)

precision recall f1-score support

0.0 0.76 0.68 0.72 207

1.0 0.73 0.81 0.77 228

avg / total 0.75 0.74 0.74 435

Confusion matrix:

[[140 67]

[ 44 184]]

Our pseudo R2 improves significantly from 0.2166 to 0.2705. The precision and recall also improve slightly, although they are still not what I would like them to be. Lets look at the marginal effects:

new_model.get_margeff(dummy=True).summary()

| Dep. Variable: | repub |

|---|---|

| Method: | dydx |

| At: | overall |

| dy/dx | std err | z | P>|z| | [95.0% Conf. Int.] | |

|---|---|---|---|---|---|

| per_construc | 0.0521 | 0.035 | 1.480 | 0.139 | -0.017 0.121 |

| per_black | -0.0049 | 0.002 | -2.351 | 0.019 | -0.009 -0.001 |

| per_unemplyd | -0.2972 | 0.036 | -8.170 | 0.000 | -0.368 -0.226 |

| per_transprt | -0.0218 | 0.037 | -0.586 | 0.558 | -0.095 0.051 |

| per_gvtwrkr | -0.0183 | 0.011 | -1.701 | 0.089 | -0.039 0.003 |

| per_enroll | 0.0340 | 0.013 | 2.588 | 0.010 | 0.008 0.060 |

| per_age65 | -0.0006 | 0.009 | -0.063 | 0.949 | -0.019 0.018 |

| mdnincm | -2.309e-06 | 3.83e-06 | -0.603 | 0.547 | -9.82e-06 5.2e-06 |

| populatn | 1.527e-06 | 5.22e-07 | 2.926 | 0.003 | 5.04e-07 2.55e-06 |

| intrland | 3.604e-09 | 1.94e-09 | 1.854 | 0.064 | -2.06e-10 7.41e-09 |

| city | -0.0834 | 0.050 | -1.668 | 0.095 | -0.181 0.015 |

| coast | -0.1100 | 0.044 | -2.495 | 0.013 | -0.196 -0.024 |

Percent unemployment remains the biggest factor for probability of republican, followed by population and then percentage of the population that is black. Interestingly, a larger population benefits republican canidates. So does having children enrolled in public schools.

What would happen if we controlled for region of the country with a series of dummy variables?

# these are regions as defined by the bureau of economic analysis

regions = {'new_england':['CT', 'ME', 'MA', 'NH', 'RI', 'VT'] ,

'mid_east': ['DE', 'DC', 'MD', 'NJ', 'NY', 'PA'],

'great_lakes': ['IL', 'IN', 'MI', 'OH', 'WI'],

'plains': ['IA', 'KS', 'MN', 'MO', 'NE', 'ND', 'SD'],

'south_east':['AL', 'AR', 'FL', 'GA', 'KY', 'LA', 'MS', 'NC', 'SC', 'TN', 'VA', 'WV'],

'south_west': ['AZ', 'NM', 'OK', 'TX'],

'rocky_mountain': ['CO', 'ID', 'MT', 'UT', 'WY'],

'far_west': ['CA', 'AK', 'HI', 'NV', 'OR', 'WA']}

#assigns 1 to that column if state is in that region

for key,val in regions.iteritems():

congress['in_' + key] = congress.state.apply(lambda state: 1 if state in regions[key] else 0)

controlled_region = ['per_construc', 'per_black', 'per_unemplyd', 'per_transprt', 'per_gvtwrkr', 'per_enroll',

'per_age65', 'mdnincm','populatn', 'intrland', 'city', 'coast', 'in_rocky_mountain',

'in_plains', 'in_new_england', 'in_great_lakes', 'in_mid_east', 'in_south_west', 'in_south_east',

'in_far_west']

controlled_model = Logit(congress.repub, sm.add_constant(congress[controlled_region])).fit()

controlled_model.summary()

Optimization terminated successfully.

Current function value: 0.482721

Iterations 7

/Users/benjamindykstra/anaconda/lib/python2.7/site-packages/statsmodels/base/model.py:971: RuntimeWarning: invalid value encountered in sqrt

return np.sqrt(np.diag(self.cov_params()))

/Users/benjamindykstra/anaconda/lib/python2.7/site-packages/scipy/stats/_distn_infrastructure.py:875: RuntimeWarning: invalid value encountered in greater

return (self.a < x) & (x < self.b)

/Users/benjamindykstra/anaconda/lib/python2.7/site-packages/scipy/stats/_distn_infrastructure.py:875: RuntimeWarning: invalid value encountered in less

return (self.a < x) & (x < self.b)

/Users/benjamindykstra/anaconda/lib/python2.7/site-packages/scipy/stats/_distn_infrastructure.py:1814: RuntimeWarning: invalid value encountered in less_equal

cond2 = cond0 & (x <= self.a)

| Dep. Variable: | repub | No. Observations: | 435 |

|---|---|---|---|

| Model: | Logit | Df Residuals: | 415 |

| Method: | MLE | Df Model: | 19 |

| Date: | Sun, 15 Jan 2017 | Pseudo R-squ.: | 0.3024 |

| Time: | 12:59:29 | Log-Likelihood: | -209.98 |

| converged: | True | LL-Null: | -301.01 |

| LLR p-value: | 1.217e-28 |

| coef | std err | z | P>|z| | [95.0% Conf. Int.] | |

|---|---|---|---|---|---|

| const | -3.1413 | nan | nan | nan | nan nan |

| per_construc | 0.2647 | 0.246 | 1.076 | 0.282 | -0.217 0.747 |

| per_black | -0.0504 | 0.016 | -3.177 | 0.001 | -0.081 -0.019 |

| per_unemplyd | -1.6023 | 0.321 | -4.997 | 0.000 | -2.231 -0.974 |

| per_transprt | -0.1629 | 0.239 | -0.681 | 0.496 | -0.632 0.306 |

| per_gvtwrkr | -0.1043 | 0.071 | -1.466 | 0.143 | -0.244 0.035 |

| per_enroll | 0.2362 | 0.090 | 2.637 | 0.008 | 0.061 0.412 |

| per_age65 | -0.0028 | 0.058 | -0.048 | 0.962 | -0.117 0.111 |

| mdnincm | 1.224e-05 | 2.77e-05 | 0.442 | 0.659 | -4.21e-05 6.65e-05 |

| populatn | 8.1e-06 | 3.12e-06 | 2.597 | 0.009 | 1.99e-06 1.42e-05 |

| intrland | 5.69e-08 | 3.16e-08 | 1.799 | 0.072 | -5.08e-09 1.19e-07 |

| city | -0.3625 | 0.332 | -1.092 | 0.275 | -1.014 0.288 |

| coast | -0.5590 | 0.283 | -1.977 | 0.048 | -1.113 -0.005 |

| in_rocky_mountain | 0.0798 | nan | nan | nan | nan nan |

| in_plains | -0.8275 | nan | nan | nan | nan nan |

| in_new_england | -1.3708 | nan | nan | nan | nan nan |

| in_great_lakes | -0.0533 | nan | nan | nan | nan nan |

| in_mid_east | 0.0881 | nan | nan | nan | nan nan |

| in_south_west | -0.0952 | nan | nan | nan | nan nan |

| in_south_east | 0.5559 | nan | nan | nan | nan nan |

| in_far_west | -1.5183 | nan | nan | nan | nan nan |

There seems to be a problem with the parameter estimates on the regions. Lets check the rank of our matrix and covariance matrix

np.linalg.matrix_rank(sm.add_constant(congress[controlled_region])), sm.add_constant(congress[controlled_region]).shape[1]

(20, 21)

controlled_model.cov_params()[['in_rocky_mountain','in_plains', 'in_new_england', 'in_great_lakes', 'in_mid_east',

'in_south_west', 'in_south_east', 'in_far_west']]

| in_rocky_mountain | in_plains | in_new_england | in_great_lakes | in_mid_east | in_south_west | in_south_east | in_far_west | |

|---|---|---|---|---|---|---|---|---|

| const | 6.386492e+13 | 6.386492e+13 | 6.386492e+13 | 6.386492e+13 | 6.386492e+13 | 6.386492e+13 | 6.386492e+13 | 6.386492e+13 |

| per_construc | -1.091468e-01 | -2.017659e-02 | 9.513134e-03 | -1.286969e-02 | -7.181959e-03 | -4.320845e-02 | 3.096794e-03 | 4.533879e-02 |

| per_black | 1.607985e-03 | 8.341282e-03 | 1.636867e-02 | 6.578895e-03 | 9.300608e-03 | 7.842342e-03 | 9.308403e-03 | 1.461101e-02 |

| per_unemplyd | -3.982602e-01 | -3.711613e-01 | -5.241872e-01 | -4.307269e-01 | -4.567296e-01 | -4.366227e-01 | -4.272142e-01 | -3.913676e-01 |

| per_transprt | -4.637134e-01 | -4.552612e-01 | -4.104197e-01 | -4.224048e-01 | -4.154182e-01 | -4.295588e-01 | -4.359294e-01 | -4.114073e-01 |

| per_gvtwrkr | -1.343203e-01 | -1.268796e-01 | -1.290734e-01 | -1.220045e-01 | -1.277040e-01 | -1.271874e-01 | -1.251051e-01 | -1.230510e-01 |

| per_enroll | -3.792752e-01 | -3.790564e-01 | -3.611060e-01 | -3.638837e-01 | -3.476087e-01 | -3.723100e-01 | -3.569134e-01 | -3.560876e-01 |

| per_age65 | -2.210222e-01 | -2.252882e-01 | -2.237586e-01 | -2.175076e-01 | -2.148911e-01 | -2.172481e-01 | -2.176205e-01 | -2.048646e-01 |

| mdnincm | -1.035904e-04 | -1.033100e-04 | -1.089805e-04 | -1.036229e-04 | -1.024194e-04 | -1.023991e-04 | -9.982727e-05 | -9.807287e-05 |

| populatn | -6.235154e-06 | -6.505035e-06 | -6.332263e-06 | -6.145872e-06 | -5.934361e-06 | -6.173450e-06 | -6.200979e-06 | -5.638813e-06 |

| intrland | -9.919726e-09 | -1.147271e-09 | 4.531544e-09 | 6.193129e-10 | 1.794346e-09 | -2.347084e-09 | 3.778531e-10 | -1.691406e-08 |

| city | -2.428479e-01 | -2.577943e-01 | -2.339646e-01 | -2.585814e-01 | -2.425629e-01 | -3.206876e-01 | -2.494138e-01 | -2.831823e-01 |

| coast | 1.471563e-02 | 2.524741e-02 | 1.110886e-02 | 4.183857e-03 | 1.168595e-02 | 1.428282e-02 | 1.356147e-02 | -2.250626e-02 |

| in_rocky_mountain | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 |

| in_plains | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 |

| in_new_england | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 |

| in_great_lakes | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 |

| in_mid_east | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 |

| in_south_west | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 |

| in_south_east | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 |

| in_far_west | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 | -6.386492e+13 |

So the matrix is not quite full rank and there is very little covariance between some of the dummy variable parameters. This is probably the cause of the problems.

Marginal effects:

controlled_model.get_margeff(dummy = True).summary()

/Users/benjamindykstra/anaconda/lib/python2.7/site-packages/statsmodels/discrete/discrete_margins.py:345: RuntimeWarning: invalid value encountered in sqrt

return cov_me, np.sqrt(np.diag(cov_me))

| Dep. Variable: | repub |

|---|---|

| Method: | dydx |

| At: | overall |

| dy/dx | std err | z | P>|z| | [95.0% Conf. Int.] | |

|---|---|---|---|---|---|

| per_construc | 0.0426 | 0.039 | 1.081 | 0.280 | -0.035 0.120 |

| per_black | -0.0081 | 0.002 | -3.357 | 0.001 | -0.013 -0.003 |

| per_unemplyd | -0.2576 | 0.047 | -5.535 | 0.000 | -0.349 -0.166 |

| per_transprt | -0.0262 | 0.038 | -0.682 | 0.495 | -0.101 0.049 |

| per_gvtwrkr | -0.0168 | 0.011 | -1.472 | 0.141 | -0.039 0.006 |

| per_enroll | 0.0380 | 0.014 | 2.797 | 0.005 | 0.011 0.065 |

| per_age65 | -0.0004 | 0.009 | -0.047 | 0.962 | -0.019 0.018 |

| mdnincm | 1.968e-06 | 4.43e-06 | 0.444 | 0.657 | -6.72e-06 1.07e-05 |

| populatn | 1.302e-06 | 4.76e-07 | 2.734 | 0.006 | 3.69e-07 2.24e-06 |

| intrland | 9.066e-09 | 4.82e-09 | 1.882 | 0.060 | -3.77e-10 1.85e-08 |

| city | -0.0595 | 0.055 | -1.075 | 0.282 | -0.168 0.049 |

| coast | -0.0920 | 0.047 | -1.963 | 0.050 | -0.184 -0.000 |

| in_rocky_mountain | 0.0128 | nan | nan | nan | nan nan |

| in_plains | -0.1341 | nan | nan | nan | nan nan |

| in_new_england | -0.2272 | nan | nan | nan | nan nan |

| in_great_lakes | -0.0086 | nan | nan | nan | nan nan |

| in_mid_east | 0.0141 | nan | nan | nan | nan nan |

| in_south_west | -0.0154 | nan | nan | nan | nan nan |

| in_south_east | 0.0883 | nan | nan | nan | nan nan |

| in_far_west | -0.2441 | nan | nan | nan | nan nan |

new_predictions = np.array([1 if x >= 0.5 else 0 for x in controlled_model.predict()])

print classification_report(congress.repub, new_predictions)

print "Confusion matrix:\n"

print confusion_matrix(congress.repub, new_predictions)

precision recall f1-score support

0.0 0.80 0.71 0.75 207

1.0 0.76 0.83 0.80 228

avg / total 0.78 0.78 0.78 435

Confusion matrix:

[[148 59]

[ 38 190]]

The model improves both true negatives and true positives. The R2 is also at a high of 0.3024 because of the better log likelihood value. It seems controlling for region of the country really helped out the model with its predictive accuracy. According to the marginal effects, a district in the south east has a 8 % chance higher of being republican, all else equal. Employment is still the most significant factore in the model and has the highest effect on probability as in the other models.

Using Sklearn’s Recursive Feature Elimination for Feature Selection

For comparison’s sake, I will use recursive feature elimination to select features from the dataset. From sklearn’s documentation:

Features whose absolute weights are the smallest are pruned from the current set features. That procedure is recursively repeated on the pruned set until the desired number of features to select is eventually reached.

In order to use this method, I will need to use sklearn’s logistic regression instead of statsmodels. Something to be aware of is the regularization term ‘C’ in sklearn - it needs to be set to an extremely high value so that the parameter estimates are the same as statsmodels.

from sklearn.feature_selection import RFECV

from sklearn.linear_model import LogisticRegression

new_ind_vars = ['per_age65', 'per_black', 'per_blucllr', 'city', 'coast', 'per_construc','per_cvllbrfr',

'per_enroll','per_farmer','per_finance','per_forborn','per_gvtwrkr','intrland','landsqmi',

'mdnincm','miltinst','miltmajr','per_miltpop','nucplant','popsqmi','populatn','per_rurlfarm',

'per_transprt','per_unemplyd','union','per_urban','per_whlretl','in_rocky_mountain', 'in_plains',

'in_new_england','in_great_lakes', 'in_mid_east', 'in_south_west', 'in_south_east','in_far_west']

x = sm.add_constant(congress[new_ind_vars])

y = congress.repub

#cross validated recursive feature elimination, with auc scoring

rfecv = RFECV(estimator= LogisticRegression(C = 1e10),scoring = 'roc_auc' )

x_new = rfecv.fit_transform(x, y)

print x_new.shape, x.shape

#get the selected features

good_features = []

for i in range(len(x.columns.values)):

if rfecv.support_[i]:

good_features.append(x.columns.values[i])

print "The selected features: \n", good_features

(435, 14) (435, 36)

The selected features:

['const', 'per_age65', 'city', 'coast', 'per_construc', 'per_cvllbrfr', 'per_finance', 'per_gvtwrkr', 'miltinst', 'per_miltpop', 'per_transprt', 'per_unemplyd', 'in_rocky_mountain', 'in_new_england']

A lot of the same features that were previously included by xgboost were selected for. Thats rather encouraging. I’ll fit the statsmodels logit to the data using the new features now.

selected_model = Logit(congress.repub, x[good_features]).fit()

selected_model.summary()

Optimization terminated successfully.

Current function value: 0.497313

Iterations 7

| Dep. Variable: | repub | No. Observations: | 435 |

|---|---|---|---|

| Model: | Logit | Df Residuals: | 421 |

| Method: | MLE | Df Model: | 13 |

| Date: | Sun, 15 Jan 2017 | Pseudo R-squ.: | 0.2813 |

| Time: | 12:59:33 | Log-Likelihood: | -216.33 |

| converged: | True | LL-Null: | -301.01 |

| LLR p-value: | 2.489e-29 |

| coef | std err | z | P>|z| | [95.0% Conf. Int.] | |

|---|---|---|---|---|---|

| const | 18.5263 | 3.283 | 5.644 | 0.000 | 12.092 24.960 |

| per_age65 | -0.1854 | 0.050 | -3.703 | 0.000 | -0.284 -0.087 |

| city | -0.6637 | 0.299 | -2.220 | 0.026 | -1.250 -0.078 |

| coast | -0.5589 | 0.267 | -2.090 | 0.037 | -1.083 -0.035 |

| per_construc | 0.4259 | 0.209 | 2.039 | 0.041 | 0.017 0.835 |

| per_cvllbrfr | -0.2094 | 0.046 | -4.595 | 0.000 | -0.299 -0.120 |

| per_finance | 0.3566 | 0.142 | 2.518 | 0.012 | 0.079 0.634 |

| per_gvtwrkr | -0.2279 | 0.068 | -3.355 | 0.001 | -0.361 -0.095 |

| miltinst | 0.3400 | 0.117 | 2.899 | 0.004 | 0.110 0.570 |

| per_miltpop | -0.4299 | 0.123 | -3.485 | 0.000 | -0.672 -0.188 |

| per_transprt | -0.3541 | 0.230 | -1.538 | 0.124 | -0.806 0.097 |

| per_unemplyd | -1.9399 | 0.283 | -6.857 | 0.000 | -2.494 -1.385 |

| in_rocky_mountain | 1.1965 | 0.909 | 1.316 | 0.188 | -0.585 2.978 |

| in_new_england | -1.0359 | 0.673 | -1.538 | 0.124 | -2.356 0.284 |

selected_model.get_margeff(dummy = True).summary()

| Dep. Variable: | repub |

|---|---|

| Method: | dydx |

| At: | overall |

| dy/dx | std err | z | P>|z| | [95.0% Conf. Int.] | |

|---|---|---|---|---|---|

| per_age65 | -0.0168 | 0.001 | -18.297 | 0.000 | -0.019 -0.015 |

| city | -0.1133 | 0.051 | -2.205 | 0.027 | -0.214 -0.013 |

| coast | -0.0928 | 0.044 | -2.130 | 0.033 | -0.178 -0.007 |

| per_construc | 0.0707 | 0.034 | 2.073 | 0.038 | 0.004 0.138 |

| per_cvllbrfr | -0.0348 | 0.007 | -5.029 | 0.000 | -0.048 -0.021 |

| per_finance | 0.0592 | 0.023 | 2.584 | 0.010 | 0.014 0.104 |

| per_gvtwrkr | -0.0378 | 0.011 | -3.518 | 0.000 | -0.059 -0.017 |

| miltinst | 0.0565 | 0.019 | 3.004 | 0.003 | 0.020 0.093 |

| per_miltpop | -0.0714 | 0.019 | -3.672 | 0.000 | -0.109 -0.033 |

| per_transprt | -0.0588 | 0.038 | -1.553 | 0.120 | -0.133 0.015 |

| per_unemplyd | -0.0394 | 0.014 | -2.767 | 0.006 | -0.067 -0.011 |

| in_rocky_mountain | 0.1838 | 0.123 | 1.500 | 0.134 | -0.056 0.424 |

| in_new_england | -0.1720 | 0.111 | -1.554 | 0.120 | -0.389 0.045 |

Wow, so in this model percent unemployment doesn’t have nearly the same maginitude effect that it does in the other models. However, I like what I see with regions - New England has always been very liberal, and that is reflected in the dummy variables ‘in_new_england’ and ‘coast’.

Interestingly, percentage black was not selected for.. I still believe that that is a very important feature and should be included. Lets add it in and reexamine the results.

good_features.append('per_black')

new_selected_model = Logit(congress.repub, x[good_features]).fit()

new_selected_model.summary()

Optimization terminated successfully.

Current function value: 0.488322

Iterations 7

| Dep. Variable: | repub | No. Observations: | 435 |

|---|---|---|---|

| Model: | Logit | Df Residuals: | 420 |

| Method: | MLE | Df Model: | 14 |

| Date: | Sun, 15 Jan 2017 | Pseudo R-squ.: | 0.2943 |

| Time: | 12:59:33 | Log-Likelihood: | -212.42 |

| converged: | True | LL-Null: | -301.01 |

| LLR p-value: | 2.411e-30 |

| coef | std err | z | P>|z| | [95.0% Conf. Int.] | |

|---|---|---|---|---|---|

| const | 17.5971 | 3.363 | 5.233 | 0.000 | 11.006 24.188 |

| per_age65 | -0.1827 | 0.050 | -3.620 | 0.000 | -0.282 -0.084 |

| city | -0.7272 | 0.304 | -2.390 | 0.017 | -1.324 -0.131 |

| coast | -0.6234 | 0.271 | -2.302 | 0.021 | -1.154 -0.093 |

| per_construc | 0.4754 | 0.214 | 2.223 | 0.026 | 0.056 0.895 |

| per_cvllbrfr | -0.2059 | 0.046 | -4.429 | 0.000 | -0.297 -0.115 |

| per_finance | 0.3566 | 0.142 | 2.505 | 0.012 | 0.078 0.636 |

| per_gvtwrkr | -0.1804 | 0.071 | -2.545 | 0.011 | -0.319 -0.041 |

| miltinst | 0.3094 | 0.118 | 2.617 | 0.009 | 0.078 0.541 |

| per_miltpop | -0.3837 | 0.123 | -3.120 | 0.002 | -0.625 -0.143 |

| per_transprt | -0.3083 | 0.233 | -1.323 | 0.186 | -0.765 0.149 |

| per_unemplyd | -1.7545 | 0.294 | -5.959 | 0.000 | -2.332 -1.177 |

| in_rocky_mountain | 0.8609 | 0.931 | 0.925 | 0.355 | -0.963 2.685 |

| in_new_england | -1.2589 | 0.674 | -1.869 | 0.062 | -2.579 0.062 |

| per_black | -0.0331 | 0.012 | -2.662 | 0.008 | -0.057 -0.009 |

new_selected_model.get_margeff().summary()

| Dep. Variable: | repub |

|---|---|

| Method: | dydx |

| At: | overall |

| dy/dx | std err | z | P>|z| | [95.0% Conf. Int.] | |

|---|---|---|---|---|---|

| per_age65 | -0.0298 | 0.008 | -3.815 | 0.000 | -0.045 -0.014 |

| city | -0.1185 | 0.048 | -2.450 | 0.014 | -0.213 -0.024 |

| coast | -0.1016 | 0.043 | -2.357 | 0.018 | -0.186 -0.017 |

| per_construc | 0.0775 | 0.034 | 2.268 | 0.023 | 0.011 0.144 |

| per_cvllbrfr | -0.0336 | 0.007 | -4.810 | 0.000 | -0.047 -0.020 |

| per_finance | 0.0581 | 0.023 | 2.570 | 0.010 | 0.014 0.102 |

| per_gvtwrkr | -0.0294 | 0.011 | -2.615 | 0.009 | -0.051 -0.007 |

| miltinst | 0.0504 | 0.019 | 2.698 | 0.007 | 0.014 0.087 |

| per_miltpop | -0.0625 | 0.019 | -3.257 | 0.001 | -0.100 -0.025 |

| per_transprt | -0.0502 | 0.038 | -1.333 | 0.183 | -0.124 0.024 |

| per_unemplyd | -0.2859 | 0.041 | -7.044 | 0.000 | -0.365 -0.206 |

| in_rocky_mountain | 0.1403 | 0.151 | 0.928 | 0.353 | -0.156 0.437 |

| in_new_england | -0.2051 | 0.108 | -1.898 | 0.058 | -0.417 0.007 |

| per_black | -0.0054 | 0.002 | -2.737 | 0.006 | -0.009 -0.002 |

new_selected_model.bic, selected_model.bic, controlled_model.bic

(515.97027448372125, 517.71751551732223, 541.47425095794426)

The percentage of unemployment has been restored to it’s former prominence. Other important variables (statistically and magnitude) include coast, construction workers, and New England. New England has the largest magnitude with a 30% reduction in probability of being republican if the district is in the region. The new model also has the lowest BIC.

Lets look at the predictive power:

new_selected_predictions = np.array([1 if x >= 0.5 else 0 for x in new_selected_model.predict()])

print classification_report(congress.repub, new_selected_predictions)

print "Confusion matrix:\n"

print confusion_matrix(congress.repub, new_selected_predictions)

precision recall f1-score support

0.0 0.75 0.70 0.72 207

1.0 0.74 0.79 0.76 228

avg / total 0.74 0.74 0.74 435

Confusion matrix:

[[144 63]

[ 49 179]]

These are actually worse predictions that the previous controlled model. We choose that model instead.

Conclusion

While there are certainly more powerful (prediction wise) algorithms out there, logistic regression is nice because it can be interpreted meaningfully and actionably. With the help of xgboost, I picked out some features that I wouldn’t have otherwise and was able to improve the logit model. After controlling for region, I achieved the best results, with in class predictions at 71% and 83% for democrats and republicans, respectively. With more detailed information on things like district religious preferences, age distributions, previous voting preferences etc, I’m sure that the model would make much better predictions.

Thanks for following along! :)